Monday, October 31, 2022

Researching our way to better research?

Tuesday, October 11, 2022

Trial participants and the luck of the draw

The guy in this cartoon really drew a short straw: most clinical trial participants, at least, know they were in a study. On the other hand, he was lucky to be hearing from the researchers about the study's results! That used to be quite unlikely.

It might be getting better: a survey of trial authors from 2014-2015 found that half said they'd communicated results to participants. That survey had a low response rate – about 16% – so it might not be the best guide. There are quite a few studies these days on how to communicate results to participants, though, and that could be a good sign. (A systematic review of those studies is on the way, and I'll be keeping an eye out for it.)

Was our guy lucky to be in a clinical trial in the first place, or was he taking on a serious risk of harm?

An older review of trials (up to 2010) across a range of diseases and interventions found no major difference: trial participants weren't apparently more likely to benefit or be harmed. Another, of women's health trials (up to 2015), concluded that women who participated in clinical trials did better than those who didn't. And a recent one in pregnant women (up to May 2022) concluded there was no major difference. All of this, though, relies on data from a tiny proportion of all the trials that people participate in, and we don't even know the results of many of them.

I think a really thorough answer to this question would have to differentiate the types of trials. For perspective, consider clinical trials of drugs. Across the board, roughly 60% of drugs that get to phase 1 (very small early safety trials) or phase 2 (mid-stage small trials) don't make it to the next phase. Most of the drugs that get to phase 3 (big efficacy trials) end up being approved: over 90% in 2015. The rate is higher than average for vaccines, and much lower for drugs for some diseases than for others.

Not progressing to the next stage doesn't tell us if people in the trials benefited or were harmed on balance, but it shows why the answer to the question of impact on individual participants could be different for different types of trials.

So was the guy in the cartoon above lucky to be in a clinical trial? The answer is a very unsatisfactory "it depends on his specific trial!" Overall, though, there's no strong evidence of either benefit or harm.

On the other hand, not doing trials at all would be a very risky proposition for the whole community. No matter which way you look at it, the rest of us have a lot of reasons to be very grateful to the people who participate in clinical trials: thank you all!

If you're interested in reading more about the history of people claiming either that participating in clinical trials is inherently risky or inherently beneficial, I dug into this in a post at Absolutely Maybe in 2020.

Monday, October 3, 2022

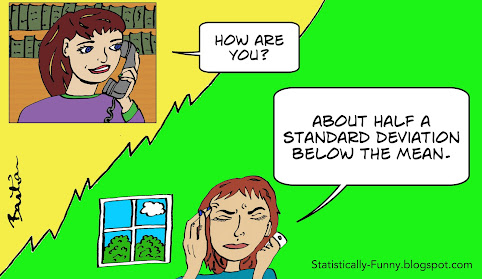

How are you?