She's right to be worried! There are so many possible cracks that bias can seep through, nudging clinical trial results off course. Some of the biggest come from people knowing which comparison group a participant will be, or has been, in. Allocation concealment and blinding are strategies to reduce this risk.

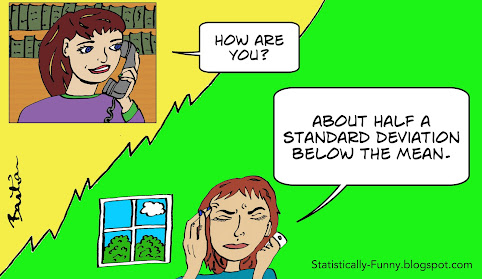

Before we get to that, let's look at the source of the problems we're aiming at here: people! They bring subjectivity to the mix, even if they are committed to the trial - and not everyone who plays a role will be supportive, anyway. On top of that, randomizing people - leaving their fate to pure chance - can be the rational and absolutely vital thing to do. But it's also "anathema to the human spirit", so it can be awfully hard to play totally by the rules.

And we're counting on a lot of people here, aren't we? There are the ones who enter an individual into one of the comparison groups in the trial. There are those individual participants themselves, and the ones dealing with them during the trial - healthcare practitioners who treat them, for example. And then there are the people measuring outcomes - someone looking at an x-ray and deciding if it's showing improvement or not, say.

What could possibly go wrong?!

Plenty, it turns out. Trials that don't have good guard rails for concealing group allocation and then blinding it are likely to exaggerate the benefits of health treatments (meta-research on this here and here).

Let's start with allocation concealment. It's critical to successfully randomizing would-be trial participants. When it's done properly, the person adding a participant to a trial has no idea which comparison group that particular person will end up in. So they can't tip the scales out of whack by, say, skipping patients they think wouldn't do well on a treatment, when that treatment is the next slot to allocate.
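To make the idea concrete, here's a minimal sketch in Python of what a concealed, central allocation step could look like. Everything in it is an assumption for illustration, not a description of any real trial system: the `CentralAllocator` class, its `enroll` method, the participant IDs, and the arm names are all made up, and it uses simple (unrestricted) randomization. What it tries to show is the order of events: the participant is registered first, the group is only generated after that, and asking again just returns the same answer.

```python
import random


class CentralAllocator:
    """Hypothetical central randomization service (illustration only).

    The recruiter never sees a list of upcoming allocations, because
    no such list exists: each arm is drawn only when a participant
    is enrolled.
    """

    def __init__(self, arms, seed=None):
        self._arms = list(arms)
        self._rng = random.Random(seed)
        self._log = {}  # participant_id -> allocated arm, in enrollment order

    def enroll(self, participant_id):
        # A participant who is already enrolled keeps their original arm,
        # so "asking again" cannot be used to fish for a preferred group.
        if participant_id in self._log:
            return self._log[participant_id]
        arm = self._rng.choice(self._arms)  # simple randomization
        self._log[participant_id] = arm
        return arm

    def audit_log(self):
        # Returns (participant_id, arm) pairs in the order people were enrolled.
        return list(self._log.items())


allocator = CentralAllocator(["treatment", "control"])
first = allocator.enroll("P001")
again = allocator.enroll("P001")
print(first == again)  # True: re-asking does not re-roll the allocation
```

The design choice that matters is that enrollment is recorded before anything is revealed, so skipping a patient because of what the "next slot" holds isn't possible: there's no next slot to look at.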

Some allocation methods make it easy to succumb to the temptation to crack the system. When allocation is done using sealed envelopes, people have admitted to opening the envelopes till they get the one they want - and even going to the radiology department to use a special lamp to see through an opaque envelope, and breaking into a researcher's office to hunt for info! Others have kept logs to try to detect patterns and predict what the next allocation is going to be.
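Why would keeping a log help? Here's a rough Python sketch of one way it could. It rests on an assumption this post doesn't make about any particular trial: that allocations are balanced within fixed-size blocks (say, 2 of each group in every 4 people). The function name `forced_next` and the arm labels are hypothetical. Under that assumption, once one group's slots in a block are used up, the log alone tells you what comes next.

```python
from collections import Counter


def forced_next(seen_in_block, block_size=4, arms=("A", "B")):
    """Return the next arm if the log so far determines it, else None.

    Assumes equal numbers of each arm within a block of fixed size
    (an illustrative assumption, not a fact about any specific trial).
    """
    per_arm = block_size // len(arms)
    counts = Counter(seen_in_block)
    # How many slots each arm still has left in the current block.
    remaining = {arm: per_arm - counts[arm] for arm in arms}
    open_arms = [arm for arm, left in remaining.items() if left > 0]
    # Only one arm left with open slots means the next allocation is certain.
    return open_arms[0] if len(open_arms) == 1 else None


print(forced_next(["A"]))            # None: either arm could still come next
print(forced_next(["A", "B", "A"]))  # B: the only slot left in this block
```

Anyone who can see past allocations and guess the block size can do this in their head, which is one more reason the person recruiting shouldn't be able to work out the sequence.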

This happens more often than you might think. A study in 2017 compared sealed envelopes with a system where you have to ring the trial coordinating center to get the allocation. There were 28 clinicians - all surgeons - allocating their patients in this trial. The result:

> With the sealed envelopes, the randomisation process was corrupted for patients recruited from three clinicians.

But there was also an overall difference in the ages of people allocated across the whole "sealed envelope" period - so some of the others must have peeked now and then, too.

Messing with allocation was one of the problems that led to a famous trial of the Mediterranean diet being retracted recently. (I wrote about this at Absolutely Maybe and for the BMJ.) Here's what happened, via a report from Gina Kolata (New York Times):

> A researcher at one of the 11 clinical centers in the trial worked in small villages. Participants there complained that some neighbors were receiving free olive oil, while they got only nuts or inexpensive gifts.
>
> So the investigator decided to give everyone in the same village the same diet. He never told the leaders of the study what he had done.
>
> "He did not think it was important"....

But it was: it was obvious on statistical analysis that the groups couldn't have been properly randomized.

The opportunities to mess up the objectivity of a trial by knowing the allocated group don't end with the randomization. Clinicians could treat people differently, thinking extra care and additional interventions are necessary for people in some groups, or being quicker to encourage people in one group to pull out of the trial. They might be more or less eager to diagnose problems, or judge an outcome measure differently.

Participants can do the equivalent of all this, too, when they know what group they are in - seek other additional treatments, be more alert to adverse effects, and so on. Ken Schulz lists potential ways clinicians and participants could change the course of a trial here, in Panel 1.

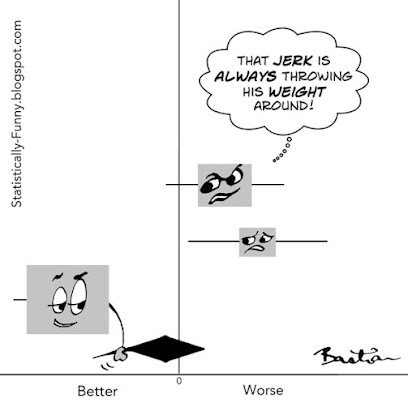

There's no way of completely preventing bias in a trial, of course. And you can't always blind people to participants' allocation - when there's no good placebo, for example. But here are 3 relevant pillars of bias minimization to always look for when you want to judge the reliability of a trial's outcomes:

- Adequate concealment of allocation at the front end;

- Blinding of participants and others dealing with them during the trial; and

- Blinding of outcome assessors - the people measuring or judging outcomes.

Pro tip: Go past the words people use (like "double blind") to see who was being blinded, and what they actually did to try to achieve it. You need to know "Who knew what and when?", not just what label the researchers put on it.

More on blinding here at Statistically Funny, and 6 Tips for Deciphering Outcomes in Health Studies at Absolutely Maybe.

Interested in learning more detail about these practices and their history? There's a great essay about the evolution of "allocation concealment" at the James Lind Library.