I used to think numbers are completely objective. Words, on the other hand, can clearly stretch out, or squeeze, people's perceptions of size. "OMG that spider is HUGE!" "Where? What - that little thing?"

Yes, numbers can be more objective than words. Take adverse effects of health care: if you use the word "common" or "rare", people won't get as accurate an impression as if you use numbers.

But that doesn't mean numbers are completely objective. Or even that numbers are always better than words. Numbers get a bit elastic in our minds, too.

We're mostly good at sizing up the kinds of quantities that we encounter in real life. For example, it's pretty easy to imagine a group of 20 people going to the movies. We can conceive pretty clearly what it means if 18 say they were on the edge of their seats the whole time.

There's an evolutionary theory about this, called ecological rationality. The idea is, our ability to reason with quantities developed in response to the quantities around us that we frequently need to mentally process. (More on this in Brase [PDF] and Gigerenzer and Hoffman [PDF].)

Whatever the reason, we're just not as good at calibrating risks that are lower frequency (Yamagishi [PDF]). We're going to get our heads around 18 out of 20 well. But 18000 out of 200000? Not so much. We'll do pretty well at 1 out of 10, or 1 out of 100 though.

And big time trouble starts if we're reading something where the denominators are jumping around - either toggling from percent to per thousand and back, or saying "7 out of 13 thought the movie was great, while 4 out of 19 thought it was too scary, and 9 out of 17 wished they had gone to another movie". We'll come back to this in a minute. But first, let's talk about some key statistics used to communicate the effects of health care.
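As a quick aside before moving on: one way to tame jumping denominators is to re-express everything on the same base. Here's a minimal Python sketch that puts the three movie reactions above on a "per 100" footing. The function name `per_hundred` and the rounding to whole numbers are my own choices for illustration.

```python
# Movie reactions from the example above, as (count, group size) pairs.
reactions = {
    "thought the movie was great": (7, 13),
    "thought it was too scary": (4, 19),
    "wished they had gone to another movie": (9, 17),
}


def per_hundred(count, total):
    """Re-express count/total as a rate per 100, rounded to a whole number."""
    return round(100 * count / total)


for label, (count, total) in reactions.items():
    rate = per_hundred(count, total)
    print(f"{count} out of {total} {label}: about {rate} in 100")
```

With a shared denominator, it's much easier to see that two of the three reactions were about equally common and the third was far less so.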

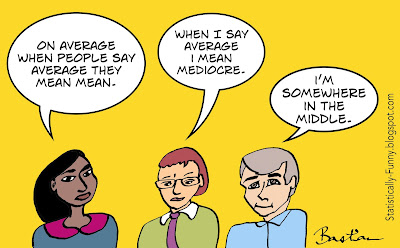

Statistics - where words and numbers combine to create a fresh sort of hell!

First there's the problem of the elasticity in the way our minds process the statistics. That means that whether they realize it or not, communicators' choice of statistic can be manipulative. Then there's the confusion created when people communicate statistics with words that get the statistics wrong.

Let's look at some common measures of effect sizes: absolute risk (AR), relative risk (RR), odds ratio (OR), and number needed to treat (NNT). (The evidence I draw on is summarized in my long post here.)

Natural frequencies are the easiest thing for people generally to understand. And getting more practice with natural frequencies might help us to get better at reasoning with numbers, too (Gigerenzer again [PDF]).

Take our movie-goers again. Say that 6 of the 20 were hyped-up before the movie even started. And 18 were hyped-up afterwards. Those are natural frequencies. If I give you those "before and after" numbers in percentages, that's "absolute risk" (AR). Lots of people (but not everybody) can manage the standardization of percentages well.

But if I use relative risks (RR) - people were 3 times as likely to be hyped-up after seeing that movie - then the all-important context of proportion is lost. That's going to sound like a lot, whether it's a tiny difference or a huge difference. People will often react to that without stopping to check, "yes, but from what to what?" From 6 to 18 out of 20 is a big difference. But going from 1 out of a gazillion to 3 out of a gazillion just ain't much worth crowing or worrying about.
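Here's the arithmetic behind that, as a small Python sketch. It computes the absolute risks, the absolute difference, and the relative risk for the movie-goers, then does the same for the "gazillion" case. The function name `compare` is hypothetical, and I've used one billion as a stand-in for a gazillion.

```python
from fractions import Fraction


def compare(before_events, after_events, total):
    """Return absolute risks, their difference, and the relative risk."""
    before = Fraction(before_events, total)
    after = Fraction(after_events, total)
    return {
        "before": before,
        "after": after,
        "absolute_difference": after - before,
        "relative_risk": after / before,
    }


GAZILLION = 10**9  # assumption: a stand-in for "a gazillion"

for name, args in [("movie-goers", (6, 18, 20)), ("gazillion", (1, 3, GAZILLION))]:
    result = compare(*args)
    print(
        f"{name}: relative risk {float(result['relative_risk']):g}, "
        f"absolute difference {float(result['absolute_difference']):g}"
    )
```

Both cases have a relative risk of 3. Only the absolute difference tells you that one change is large and the other is vanishingly small.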

RRs are critically important: they're needed for calculating a personalized risk if you're not at the same risk as the people in a study, for example. But if it's the only number you look at, you can get an exaggerated idea.
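To show why the RR matters for personalizing, here's a rough Python sketch that applies the same relative risk to different starting points. It assumes the RR from a study carries over unchanged to your own baseline, which won't always hold. The function name `personal_risk` and the 2% baseline are made up for illustration.

```python
def personal_risk(baseline_risk, relative_risk):
    """Scale a baseline risk (0 to 1) by a relative risk.

    Assumes the relative risk is the same at every baseline, which is a
    simplification. The result is only meaningful while it stays at or below 1.
    """
    return baseline_risk * relative_risk


RR = 3  # the relative risk from the movie example

# Someone starting at the study's baseline (30%) versus a much lower one (2%).
for baseline in (0.30, 0.02):
    risk = personal_risk(baseline, RR)
    print(f"baseline {baseline:.0%} -> {risk:.0%} with RR {RR}")
```

The same "3 times as likely" means a 60-point jump for one person and a 4-point jump for another.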

So sticking with absolute risks or natural frequencies, and making sure the baseline is clear (the "before" number), is better at helping people understand an effect. Then they can put their own values on it.

The number needed to treat (NNT) takes the change in absolute risk and turns it upside down. (Instead of calculating the difference out of 100, it's 100 divided by the difference.) So instead of the constant denominator of 100, you now have ones that change: instead of 60% of people being hyped-up because of the movie, it becomes NNT 1.7 (1.7 people have to see the movie for 1 person to get hyped-up).
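The calculation is short enough to sketch in Python. This uses the movie numbers (30% hyped-up before, 90% after); the function name `nnt` is just a label I've picked, and the second example with a 1-point difference is hypothetical.

```python
def nnt(before_percent, after_percent):
    """Number needed to treat: 100 divided by the absolute difference in percent."""
    difference = abs(after_percent - before_percent)
    if difference == 0:
        raise ValueError("No difference between groups, so the NNT is undefined.")
    return 100 / difference


# Movie example: 6 of 20 (30%) before, 18 of 20 (90%) after.
print(f"Movie NNT: {nnt(30, 90):.1f}")

# Hypothetical small effect: a 1 percentage point difference.
print(f"Small-effect NNT: {nnt(2, 3):.0f}")
```

Notice how the number gets bigger as the effect gets smaller, which is part of what makes NNTs harder to read than a percentage.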

This can be great in some circumstances, and many people are really used to NNTs. But on average, this is one of the hardest effect measures to understand. Which means that it's easier to be manipulated by it.

NNT is the anti-RR if you like: RRs exaggerate, NNTs minimize. Both can mislead - and that can be unintentional or deliberate.

When it comes to communicating with people who need to use results, I think using only statistics that will frequently mislead because it's a preference of the communicator is paternalistic, because it denies people the right to an impression based on their own values. Like all forms of paternalism, that's sometimes justified. But there's a problem when it becomes the norm.

The NNT was developed in the 1990s [PDF]. It was meant to do a few things - including counteracting the exaggeration of the RR. Turns out it overshot the mark there! It was also intended to be easier to understand than the odds ratio (OR).

The OR brings us to the crux of the language problems. People use words like odds, risks, and chances interchangeably. Aaarrrggghhh!

A risk in statistics is what we think of as our chances of being in the group: a 60% absolute risk means a 60 in 100 (or 6 in 10) "chance".

Odds in statistics are like odds in horse-racing and other gambling: they weigh the chances of "winning" against the chances of "losing". An odds ratio compares two sets of odds. (If you want to really get your head around this, check out Know Your Chances by Woloshin, Schwartz, and Welch. It's a book that's been shown in trials to work!)
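To make the difference between a risk and odds concrete, here's a small Python sketch. It turns a 60% risk into odds, then works out the odds ratio for the movie-goers (6 of 20 hyped-up before, 18 of 20 after). The function names are my own, chosen for illustration.

```python
from fractions import Fraction


def odds_from_risk(risk):
    """Odds = chance of the event divided by chance of no event."""
    return risk / (1 - risk)


def odds_from_counts(events, total):
    """Odds from natural frequencies: events versus non-events."""
    return Fraction(events, total - events)


# A 60% risk is 60 in 100; as odds, that's 60 to 40, or 3 to 2.
print("Odds for a 60% risk:", odds_from_risk(Fraction(60, 100)))

# Movie-goers: odds of being hyped-up before and after.
odds_before = odds_from_counts(6, 20)   # 6 hyped-up versus 14 not
odds_after = odds_from_counts(18, 20)   # 18 hyped-up versus 2 not
odds_ratio = odds_after / odds_before

print("Odds before:", odds_before)
print("Odds after:", odds_after)
print("Odds ratio:", odds_ratio)
```

For the same data, the relative risk is 3 but the odds ratio is 21. That's why reading an odds ratio as if it were a relative risk can give a badly inflated impression when the outcome is common.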

The odds ratio is a complicated thing to understand, especially if it's embedded in confusing language. It's a very sound way to deal with data from some types of studies, though. So you see odds ratios a lot in meta-analyses. (If you're stumped about getting a sense of proportion in a meta-analysis, look at the number of events and the number of participants - they are the natural frequencies.)

There's one problem that all of these ways of portraying risks/chances have in common: when people start putting them in sentences, they frequently get the language wrong. So they can end up communicating something entirely other than what was intended. You really need to double-check exactly what the number is, if you want to protect yourself from getting the wrong impression.

OK, then, so what about "pictures" to portray numbers? Can that get us past the problems of words and numbers? Graphs, smile-y versus frown-y faces, and the like? Many think this is "the" answer.

But...

This is going to be useful in some circumstances, misleading in others.

Gerd Gigerenzer and Adrian Edwards: "Pictorial representations of risk are not immune to manipulation either". (A topic for another time, although I deal with it a little in the "5 shortcuts" post listed below.)

Where does all this leave us? Few researchers reporting data have the time to invest in keeping up with the literature on communicating numbers - so while we can plug away at improving the quality of reporting of statistics, there's no overnight solution there.

Getting the hang of the common statistics yourself is one way. But the two most useful all-purpose strategies could involve detecting bias.

One is to sharpen your skills at detecting people's ideological biases and use of spin. Be on full alert when you can see someone is utterly convinced and trying to persuade you with all their chips on a particular way of looking at data - especially if it's data on a single outcome. If the question matters to you, beware of the too-simple answer.

The second? Be on full alert when you see something you really want, or don't want, to believe. The biggest bias we have to deal with is our own.

More of my posts relevant to this theme:

Does It Work? Beware of the Too-Simple Answer

At Absolutely Maybe (PLOS Blogs):

5 Shortcuts to Keep Data on Risks in Perspective

Mind your "p"s, RRs, and NNTs: On Good Statistics Behavior

At Third Opinion (MedPage Today):

The Trouble With Evidence-Based Medicine, the 'Brand'

The NNT: An Overhyped and Confusing Statistic

Check out hildabastian.net for a running summary of what I'm writing about.